Deployment Workflows Guide

Comprehensive deployment automation covering tag cutting, environment-specific behaviors, Docker handling, and the repository release process

This guide details our deployment workflows, from automatic deployments to the development environment through production tag cutting and release processes.

Deployment Architecture Overview

Environment-Specific Behaviors

Development Environment

- Trigger: Automatic on merge to main branch

- Process: Build → Test → Deploy → Sync

- Deployment: Immediate automatic deployment

- Monitoring: Continuous Argo sync monitoring

- Rollback: Automatic rollback on failure

Staging Environment

- Trigger: Manual tag cutting required

- Process: Tag → Artifact → Argo → Deploy

- Approval: Manual review and approval gates

- Testing: Full integration and user acceptance testing

- Validation: Performance and security validation

Production Environment

- Trigger: Manual tag cutting with approval workflow

- Process: Tag → Hash → Platform artifact → Argo deployment

- Controls: Multiple approval gates and change management

- Monitoring: Enhanced monitoring and alerting

- Rollback: Controlled rollback procedures with impact assessment

Tag Cutting and Release Process

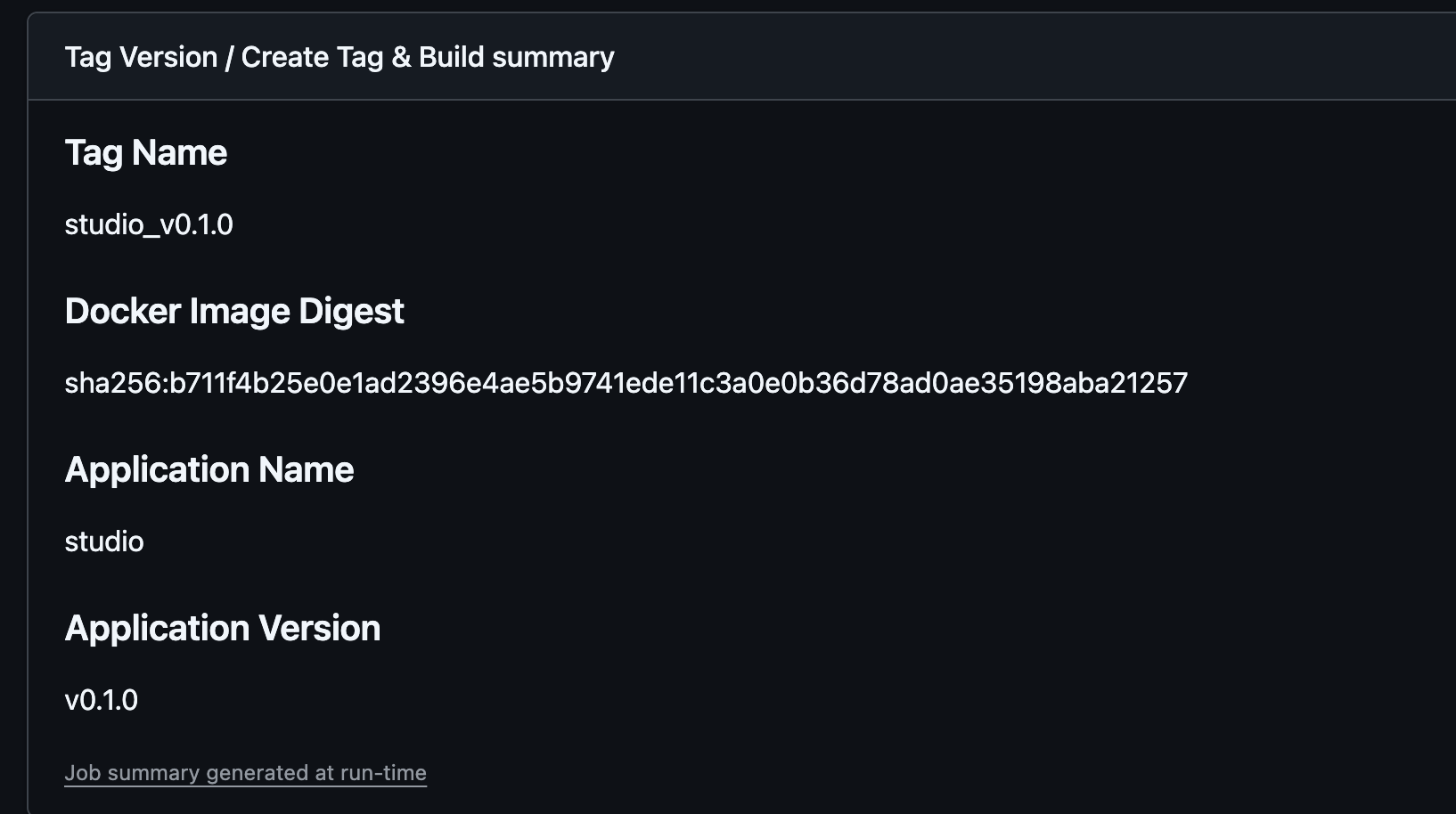

What Information is Retained During Tagging

When a tag is created and the build process completes, specific metadata is preserved and passed to ArgoCD for deployment tracking. This information provides full traceability from source code to running containers:

Key Information Retained:

- Tag Name: Semantic version identifier (e.g., studio_v0.1.0)

- Docker Image Digest: Immutable SHA256 hash of the container image

- Application Name: Service identifier for deployment targeting

- Application Version: Version metadata for tracking and rollbacks

This comprehensive metadata ensures:

- Immutable Deployments: The exact same container image is deployed across environments

- Full Traceability: Every deployment can be traced back to its source commit and build

- Rollback Capability: Previous versions can be easily identified and restored

- Security Compliance: Image digests provide cryptographic verification of content integrity

- Audit Trail: Complete deployment history for compliance and debugging
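Image digests are content addresses: the digest is the SHA-256 of the image manifest bytes (or, for multi-platform images, of the image index), written as sha256: followed by 64 hex characters. The sketch below shows how such a digest is formed and checked. It works on raw bytes only and does not talk to a registry; the helper names are illustrative.

```python
import hashlib
import re

# Expected shape of a content digest: "sha256:" followed by 64 lowercase hex chars
DIGEST_RE = re.compile(r"^sha256:[0-9a-f]{64}$")


def compute_digest(content: bytes) -> str:
    """Return the content digest for a blob such as an image manifest."""
    return "sha256:" + hashlib.sha256(content).hexdigest()


def is_valid_digest(digest: str) -> bool:
    """Check that a digest string is well formed (does not check any content)."""
    return bool(DIGEST_RE.match(digest))


def verify_content(content: bytes, expected_digest: str) -> bool:
    """Return True only if the content hashes to the expected digest."""
    return is_valid_digest(expected_digest) and compute_digest(content) == expected_digest


if __name__ == "__main__":
    manifest = b'{"schemaVersion": 2}'  # hypothetical manifest bytes
    digest = compute_digest(manifest)
    print(digest, verify_content(manifest, digest))
    # Changing even one byte of content yields a different digest
    print(verify_content(manifest + b" ", digest))
```

Because any change to the content changes the digest, pinning a deployment to a digest guarantees the same image bytes in every environment, which a mutable tag such as latest cannot.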

Tag Creation Workflow

```yaml
# Example: Manual tag cutting workflow
name: Create Release Tag

on:
  workflow_dispatch:
    inputs:
      environment:
        description: 'Target environment'
        required: true
        type: choice
        options:
          - staging
          - production
      version:
        description: 'Release version (e.g., v1.2.3)'
        required: true
        type: string

# Pushing a tag with GITHUB_TOKEN requires write access to repository contents
permissions:
  contents: write

jobs:
  create-tag:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4

      - name: Create and push tag
        # Pass the input through env instead of interpolating it into the script
        env:
          VERSION: ${{ inputs.version }}
        run: |
          git tag "$VERSION"
          git push origin "$VERSION"

      - name: Update platform artifact
        env:
          VERSION: ${{ inputs.version }}
        run: |
          # Tag + hash updates go into platform repo artifact
          echo "Updating platform artifact with tag: $VERSION"
          # github.sha is the Git commit SHA, not the Docker image digest
          echo "Hash: ${{ github.sha }}"

      - name: Trigger Argo deployment
        run: |
          # Argo picks up artifact for further processing
          echo "Notifying Argo of new deployment artifact"
```
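The workflow accepts any string as the version input. A validation step could reject malformed tags before anything is pushed. The sketch below assumes a tag convention inferred from the examples in this guide: an optional lowercase application prefix with an underscore (as in studio_v0.1.0), then v and MAJOR.MINOR.PATCH. Pre-release and build metadata are not handled, and parse_release_tag is a hypothetical helper.

```python
import re
from typing import NamedTuple, Optional

# Assumed convention: optional "<app>_" prefix, then "v" + MAJOR.MINOR.PATCH without leading zeros
TAG_RE = re.compile(
    r"^(?:(?P<app>[a-z][a-z0-9-]*)_)?"
    r"v(?P<major>0|[1-9]\d*)\.(?P<minor>0|[1-9]\d*)\.(?P<patch>0|[1-9]\d*)$"
)


class ReleaseTag(NamedTuple):
    app: Optional[str]
    major: int
    minor: int
    patch: int


def parse_release_tag(tag: str) -> ReleaseTag:
    """Parse a release tag, raising ValueError if it does not follow the convention."""
    match = TAG_RE.match(tag)
    if match is None:
        raise ValueError(f"invalid release tag: {tag!r}")
    return ReleaseTag(
        app=match.group("app"),
        major=int(match.group("major")),
        minor=int(match.group("minor")),
        patch=int(match.group("patch")),
    )


if __name__ == "__main__":
    print(parse_release_tag("studio_v0.1.0"))
    print(parse_release_tag("v1.2.3"))
```

Failing fast on "1.2.3" or "v1.2" keeps malformed tags out of the repository, where they would otherwise have to be deleted from every clone.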

Platform Repository Integration

- Tag + Hash Updates: Release information is packaged into platform repository artifacts

- Argo Integration: ArgoCD monitors platform artifacts and triggers deployments

- GitOps Flow: All deployments follow GitOps principles with git-based state management

- Artifact Tracking: Complete traceability from source code to deployed version

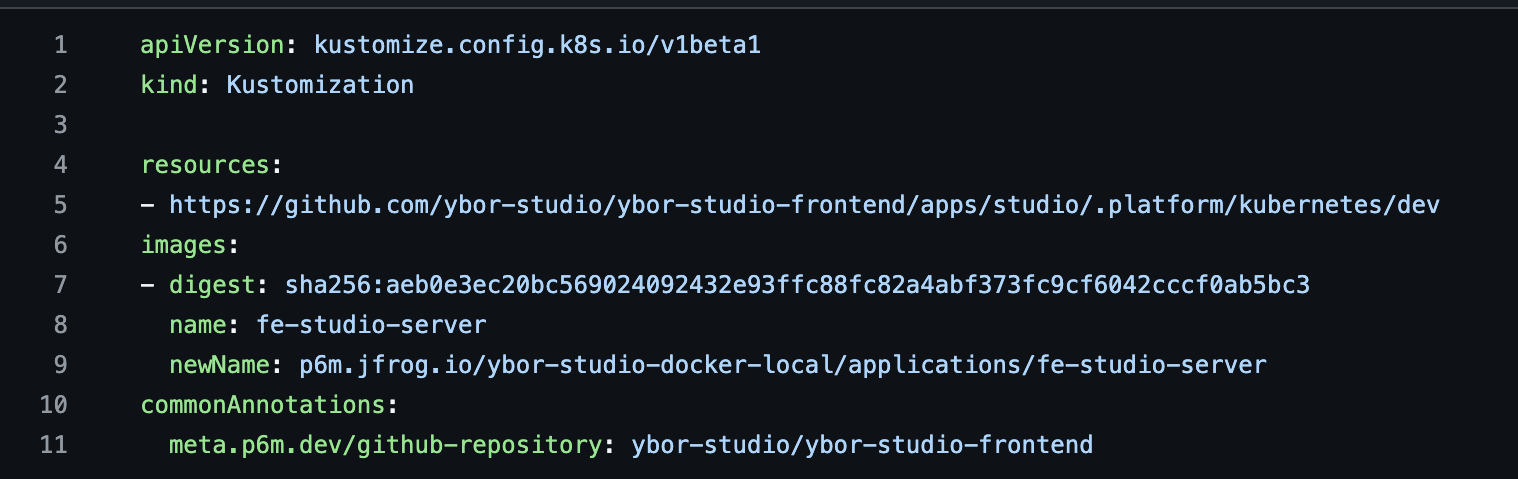

Platform Artifact Updates - The ArgoCD Trigger

When a release is promoted to the .platform configuration, the deployment process updates the configuration files that ArgoCD monitors. This update is the mechanism that triggers an ArgoCD redeployment.

Platform Artifact Update Process:

- Tag Creation: Developer or automation creates a release tag

- Artifact Generation: CI/CD pipeline packages release information into platform artifacts

- Configuration Update: Platform repository configuration files are updated with:
  - New image tags
  - Updated SHA digests
  - Environment-specific configurations
  - Resource specifications

- ArgoCD Detection: ArgoCD continuously monitors the platform repository for changes

- Automatic Sync: ArgoCD detects configuration changes and triggers redeployment

- Health Validation: ArgoCD ensures the deployment reaches desired state

Key Files Updated:

```yaml
# kustomization.yaml example
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization

resources:
  - https://github.com/ybor-studio/ybor-studio-frontend/apps/studio/.platform/kubernetes/dev

images:
  - name: fe-studio-server
    newName: p6m.jfrog.io/ybor-studio-docker-local/applications/fe-studio-server
    digest: sha256:aeb0e3ec20bc56902409432e93ffc88fc82a4abf373fc9cf6042cccf0ab5bc3
```
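The configuration update amounts to rewriting the images entry in this file. The kustomize CLI can do that directly with kustomize edit set image fe-studio-server=<newName>@<digest>. The sketch below shows roughly the same edit on a kustomization that has already been parsed into a Python dict; reading and writing the YAML file is left out, and pin_image is a hypothetical helper.

```python
from typing import Any, Dict


def pin_image(kustomization: Dict[str, Any], name: str, new_name: str, digest: str) -> Dict[str, Any]:
    """Set (or add) an images entry that pins `name` to `new_name@digest`.

    Returns a new dict; the input kustomization is left unchanged.
    """
    updated = dict(kustomization)
    images = [dict(entry) for entry in kustomization.get("images", [])]
    for entry in images:
        if entry.get("name") == name:
            entry["newName"] = new_name
            entry["digest"] = digest
            entry.pop("newTag", None)  # in this sketch a digest pin replaces any tag pin
            break
    else:
        # No existing entry for this image: append one
        images.append({"name": name, "newName": new_name, "digest": digest})
    updated["images"] = images
    return updated
```

Committing the resulting file to the platform repository is what changes the Git commit hash that ArgoCD watches.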

This Update Triggers ArgoCD Because:

- ArgoCD polls the platform repository every 3 minutes (configurable)

- Git commit hash changes when configuration files are updated

- ArgoCD compares desired state (Git) vs actual state (Kubernetes)

- Differences trigger automatic synchronization and deployment

- Health checks ensure successful deployment completion
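Because detection is poll-based, a commit is noticed at the first poll at or after it, so a change can wait up to one full polling interval before a sync starts. A small model of that arithmetic, assuming polls at fixed multiples of the interval starting at time zero (a real ArgoCD installation may add jitter, and a Git webhook can trigger a refresh immediately):

```python
import math


def detection_time(commit_time: float, poll_interval: float = 180.0) -> float:
    """Return when a poller running at 0, interval, 2*interval, ... first sees a commit.

    Times are in seconds. A commit landing exactly on a poll is seen by that poll.
    """
    if commit_time < 0:
        raise ValueError("commit_time must be non-negative")
    return math.ceil(commit_time / poll_interval) * poll_interval


def detection_delay(commit_time: float, poll_interval: float = 180.0) -> float:
    """Seconds between the commit and the poll that detects it."""
    return detection_time(commit_time, poll_interval) - commit_time


if __name__ == "__main__":
    for t in (10, 181, 360):
        print(t, detection_time(t), detection_delay(t))
```

With the default 3-minute interval, a commit 10 seconds after a poll waits 170 seconds, which is why a webhook is worth configuring when fast feedback matters.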

Repository Structure:

```text
platform-repository/
├── applications/
│   ├── fe-studio-server/
│   │   ├── base/
│   │   │   ├── kustomization.yaml
│   │   │   └── deployment.yaml
│   │   └── overlays/
│   │       ├── dev/
│   │       ├── staging/
│   │       └── production/
│   └── other-services/
└── argocd-apps/
    ├── dev-apps.yaml
    ├── staging-apps.yaml
    └── prod-apps.yaml
```

ArgoCD Kustomization & Kubernetes Application

How ArgoCD Uses Kustomization

ArgoCD leverages Kustomize to build and apply Kubernetes manifests dynamically. This provides environment-specific customizations while maintaining a single source of truth.

Kustomization Build Process:

- Base Resources: ArgoCD starts with base Kubernetes manifests

- Overlay Application: Environment-specific overlays are applied

- Image Updates: Image tags and digests are replaced with current versions

- Resource Patching: Environment-specific configurations are applied

- Manifest Generation: Final Kubernetes YAML is generated

- Cluster Application: Generated manifests are applied to the target cluster
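The image-update step only touches containers whose image name matches the name in an images entry. The sketch below is a simplified model of that rule, assuming kustomize's behavior of matching the full image name exactly once any tag or digest is stripped, then writing newName@digest. It is illustrative rather than kustomize's own code; for example, it ignores newTag.

```python
from typing import Optional


def split_image(image: str) -> str:
    """Return the image name without its tag or digest."""
    name = image.split("@", 1)[0]      # drop "@sha256:..." if present
    last_slash = name.rfind("/")
    last_colon = name.rfind(":")
    if last_colon > last_slash:        # a colon after the last "/" starts a tag (not a registry port)
        name = name[:last_colon]
    return name


def transform_image(image: str, name: str, new_name: Optional[str], digest: Optional[str]) -> str:
    """Apply one images entry to a container image reference (simplified)."""
    current = split_image(image)
    if current != name:
        return image                   # no exact match: leave the image untouched
    base = new_name or name
    if digest:
        return f"{base}@{digest}"      # a digest pin replaces any tag
    return base + image[len(current):] # rename only, keep the original tag/digest suffix
```

This is why the image name in the base manifest and the name in each overlay's images entry have to agree exactly: an entry named fe-studio-server does not match an image written with its full registry path.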

Base Configuration Structure

Base Kustomization (base/kustomization.yaml):

```yaml
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
metadata:
  name: fe-studio-server-base

resources:
  - deployment.yaml
  - service.yaml
  - configmap.yaml
  - serviceaccount.yaml

commonLabels:
  app: fe-studio-server
  version: v1.0.0

commonAnnotations:
  maintainer: "platform-team@company.com"
  description: "Frontend Studio Server Application"

# Resource limits and requests
patches:
  - target:
      kind: Deployment
      name: fe-studio-server
    patch: |-
      # "add" creates the field; "replace" would fail because the
      # base Deployment does not define resources yet
      - op: add
        path: /spec/template/spec/containers/0/resources
        value:
          requests:
            memory: "256Mi"
            cpu: "100m"
          limits:
            memory: "512Mi"
            cpu: "200m"
```
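The patches in these kustomizations use JSON Patch (RFC 6902) operations, and the difference between add and replace matters: replace requires the target to exist already, while add creates an object member (or overwrites an existing one), and a trailing - appends to an array. A minimal sketch of just those two operations on plain Python data; it handles object keys and array indexes only, and skips JSON Pointer escaping (~0, ~1) and other RFC 6902 checks.

```python
from typing import Any, List, Tuple


def _parent(doc: Any, path: str) -> Tuple[Any, str]:
    """Walk to the parent container of a JSON Pointer path; return (parent, last_token)."""
    tokens: List[str] = path.lstrip("/").split("/")
    node = doc
    for token in tokens[:-1]:
        node = node[int(token)] if isinstance(node, list) else node[token]
    return node, tokens[-1]


def apply_op(doc: Any, op: str, path: str, value: Any) -> None:
    """Apply a single JSON Patch add/replace operation in place (simplified)."""
    parent, key = _parent(doc, path)
    if op == "add":
        if isinstance(parent, list):
            if key == "-":
                parent.append(value)            # "-" appends to the array
            else:
                parent.insert(int(key), value)  # add at an index inserts before it
        else:
            parent[key] = value                 # creates or overwrites the member
    elif op == "replace":
        if isinstance(parent, list):
            index = int(key)
            if not 0 <= index < len(parent):
                raise KeyError(f"replace target does not exist: {path}")
            parent[index] = value
        else:
            if key not in parent:
                raise KeyError(f"replace target does not exist: {path}")
            parent[key] = value
    else:
        raise ValueError(f"unsupported op: {op}")
```

Running replace against a path the base never defined fails the whole kustomize build, so add is the safer choice when a field may be absent.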

Base Deployment (base/deployment.yaml):

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: fe-studio-server
spec:
  replicas: 2
  selector:
    matchLabels:
      app: fe-studio-server
  template:
    metadata:
      labels:
        app: fe-studio-server
    spec:
      serviceAccountName: fe-studio-server
      containers:
        - name: app
          # Overlays rewrite this via their images entry (name: fe-studio-server),
          # which only matches when the image name here is exactly fe-studio-server
          image: fe-studio-server:latest
          ports:
            - containerPort: 8080
              name: http
          env:
            - name: ENVIRONMENT
              value: "base"
          livenessProbe:
            httpGet:
              path: /health
              port: 8080
            initialDelaySeconds: 30
            periodSeconds: 10
          readinessProbe:
            httpGet:
              path: /ready
              port: 8080
            initialDelaySeconds: 5
            periodSeconds: 5
```
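The probe settings determine how quickly Kubernetes reacts to a starting or unhealthy container. Assuming the Kubernetes defaults of failureThreshold 3 and successThreshold 1, and ignoring probe timeouts and kubelet jitter, a container that never becomes healthy fails its liveness probe at roughly 30, 40 and 50 seconds and is restarted after the third failure, while a healthy container can be marked Ready by its first readiness probe at about 5 seconds. The arithmetic as a small sketch:

```python
def earliest_liveness_restart(initial_delay: int, period: int, failure_threshold: int = 3) -> int:
    """Approximate seconds after container start at which a never-healthy container
    is restarted: the probe must fail `failure_threshold` times in a row.
    Ignores timeoutSeconds, kubelet jitter and startup probes."""
    return initial_delay + (failure_threshold - 1) * period


def earliest_ready(initial_delay: int, period: int, success_threshold: int = 1) -> int:
    """Approximate seconds after start at which a healthy container becomes Ready."""
    return initial_delay + (success_threshold - 1) * period


if __name__ == "__main__":
    print(earliest_liveness_restart(30, 10))  # livenessProbe values from the base Deployment
    print(earliest_ready(5, 5))               # readinessProbe values from the base Deployment
```

If the application regularly needs longer than this to start, raising initialDelaySeconds (or adding a startup probe) avoids restart loops.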

Environment-Specific Overlays

Development Overlay (overlays/dev/kustomization.yaml):

```yaml
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
namespace: fe-studio-dev

resources:
  - ../../base

# Image updates with specific digest
images:
  - name: fe-studio-server
    newName: p6m.jfrog.io/ybor-studio-docker-local/applications/fe-studio-server
    digest: sha256:aeb0e3ec20bc56902409432e93ffc88fc82a4abf373fc9cf6042cccf0ab5bc3

# Environment-specific patches
patches:
  - target:
      kind: Deployment
      name: fe-studio-server
    patch: |-
      - op: replace
        path: /spec/replicas
        value: 1
      - op: replace
        path: /spec/template/spec/containers/0/env/0/value
        value: "development"
      - op: add
        path: /spec/template/spec/containers/0/env/-
        value:
          name: DEBUG_MODE
          value: "true"

# Development-specific ConfigMap
configMapGenerator:
  - name: fe-studio-config
    literals:
      - LOG_LEVEL=debug
      - API_ENDPOINT=https://api-dev.company.com
      - CACHE_TTL=30s
```
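configMapGenerator appends a hash of the ConfigMap contents to its name and updates references to it, so changing a literal such as LOG_LEVEL produces a new ConfigMap name and therefore a new pod template, which triggers a rollout. The sketch below imitates that idea with a plain SHA-256 over the sorted literals. It is only an illustration: kustomize computes its suffix differently, so these names will not match what kustomize generates, and generated_name is a hypothetical helper.

```python
import hashlib
from typing import List


def generated_name(name: str, literals: List[str], length: int = 10) -> str:
    """Return name-<hash>, where the hash depends only on the key=value literals."""
    data = dict(literal.split("=", 1) for literal in literals)
    # Sort keys so the order the literals are listed in does not change the hash
    canonical = "\n".join(f"{key}={data[key]}" for key in sorted(data))
    suffix = hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:length]
    return f"{name}-{suffix}"


if __name__ == "__main__":
    dev = ["LOG_LEVEL=debug", "API_ENDPOINT=https://api-dev.company.com", "CACHE_TTL=30s"]
    print(generated_name("fe-studio-config", dev))
```

Identical contents always map to the same name, so re-running a build without configuration changes does not restart pods.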

Production Overlay (overlays/production/kustomization.yaml):

```yaml
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
namespace: fe-studio-prod

# Base resources plus the production-specific HPA
# (resources may only be declared once per Kustomization)
resources:
  - ../../base
  - hpa.yaml

# Production image with verified digest
images:
  - name: fe-studio-server
    newName: p6m.jfrog.io/ybor-studio-docker-local/applications/fe-studio-server
    digest: sha256:c4f3f1c9d8e2a5b6f7e8d9c0a1b2e3f4a5b6c7d8e9f0a1b2c3d4e5f6a7b8c9d0

# Production-specific configuration
patches:
  - target:
      kind: Deployment
      name: fe-studio-server
    patch: |-
      - op: replace
        path: /spec/replicas
        value: 3
      - op: replace
        path: /spec/template/spec/containers/0/env/0/value
        value: "production"
      - op: replace
        path: /spec/template/spec/containers/0/resources
        value:
          requests:
            memory: "512Mi"
            cpu: "200m"
          limits:
            memory: "1Gi"
            cpu: "500m"

# Production ConfigMap
configMapGenerator:
  - name: fe-studio-config
    literals:
      - LOG_LEVEL=info
      - API_ENDPOINT=https://api.company.com
      - CACHE_TTL=300s
      - METRICS_ENABLED=true
```
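Resource quantities mix units: memory values such as 512Mi and 1Gi use binary suffixes (Mi = 2^20 bytes, Gi = 2^30 bytes), and CPU values such as 200m are in millicores, so 512Mi is 536,870,912 bytes and 200m is 0.2 of a core. Kubernetes rejects a container whose request exceeds its limit. The sketch below parses only the suffixes used in these overlays and checks that each request is at most its limit; it is not a full implementation of the Kubernetes quantity format (decimal suffixes such as M or G, exponents and so on are not handled).

```python
from fractions import Fraction
from typing import Dict

# Only the suffixes used in this guide; the real quantity grammar is larger
_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "m": Fraction(1, 1000)}


def parse_quantity(value: str) -> Fraction:
    """Parse '512Mi' to bytes or '200m' to cores, as an exact Fraction."""
    for suffix, factor in _SUFFIXES.items():
        if value.endswith(suffix):
            return Fraction(value[: -len(suffix)]) * factor
    return Fraction(value)  # plain number, e.g. "1" CPU


def requests_within_limits(resources: Dict[str, Dict[str, str]]) -> bool:
    """True if every request is <= the matching limit (requests without a limit are skipped)."""
    requests = resources.get("requests", {})
    limits = resources.get("limits", {})
    return all(
        parse_quantity(amount) <= parse_quantity(limits[name])
        for name, amount in requests.items()
        if name in limits
    )
```

A check like this can run in CI against the rendered overlays, catching an invalid combination before ArgoCD tries to apply it.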

ArgoCD Application Configuration

ArgoCD Application Manifest (argocd-apps/dev-apps.yaml):

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: fe-studio-server-dev
  namespace: argocd
  finalizers:
    - resources-finalizer.argocd.argoproj.io
spec:
  project: default
  source:
    repoURL: https://github.com/platform-team/platform-configs
    targetRevision: HEAD
    path: applications/fe-studio-server/overlays/dev
  destination:
    server: https://kubernetes.default.svc
    namespace: fe-studio-dev
  syncPolicy:
    automated:
      prune: true
      selfHeal: true
    syncOptions:
      - CreateNamespace=true
      - ApplyOutOfSyncOnly=true
    retry:
      limit: 5
      backoff:
        duration: 5s
        factor: 2
        maxDuration: 3m
```
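With these retry settings, each failed sync attempt waits duration × factor^n before the next try, capped at maxDuration. For duration 5s, factor 2 and a limit of 5 retries, the waits are 5, 10, 20, 40 and 80 seconds (155 seconds in total), all below the 3m cap. A sketch of that calculation, based on the documented meaning of these fields rather than on ArgoCD's source:

```python
from typing import List


def backoff_schedule(duration_s: float, factor: float, max_duration_s: float, limit: int) -> List[float]:
    """Return the wait before each retry: duration * factor**n, capped at max_duration."""
    return [min(duration_s * factor**attempt, max_duration_s) for attempt in range(limit)]


if __name__ == "__main__":
    waits = backoff_schedule(5, 2, 180, 5)  # duration: 5s, factor: 2, maxDuration: 3m, limit: 5
    print(waits, sum(waits))
```

Raising limit beyond 6 adds retries that each wait the full 3-minute cap, since 5 × 2^6 = 320 seconds already exceeds it.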

Kubernetes Application Process

When ArgoCD Applies Changes:

- Repository Polling: ArgoCD polls the Git repository every 3 minutes

- Change Detection: Compares current Git commit hash with last processed hash

- Kustomization Build: Runs kustomize build on the target overlay

- Manifest Comparison: Compares generated manifests with cluster state

- Resource Application: Applies differences using kubectl apply

- Health Validation: Monitors resource health and rollout status

- Sync Completion: Reports success/failure status
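The comparison and application steps come down to a diff between desired (Git) and live (cluster) state. A toy model, assuming resources are keyed by kind, namespace and name and compared by whole-object equality (ArgoCD's real diff is field-aware and ignores server-populated fields), and assuming the live set holds only resources ArgoCD tracks for this application. It shows which resources would be created, updated, left alone or pruned; plan_sync is a hypothetical name.

```python
from typing import Dict, List, Tuple

Key = Tuple[str, str, str]  # (kind, namespace, name)


def plan_sync(desired: Dict[Key, dict], live: Dict[Key, dict], prune: bool) -> Dict[str, List[Key]]:
    """Compare desired (Git) and live (cluster) resources and plan sync actions."""
    plan: Dict[str, List[Key]] = {"create": [], "update": [], "unchanged": [], "prune": []}
    for key, manifest in desired.items():
        if key not in live:
            plan["create"].append(key)
        elif live[key] != manifest:
            plan["update"].append(key)     # new commit or manual drift: re-apply from Git
        else:
            plan["unchanged"].append(key)  # not re-applied when ApplyOutOfSyncOnly=true
    if prune:
        # Tracked resources that exist in the cluster but are no longer in Git
        plan["prune"] = [key for key in live if key not in desired]
    return plan
```

Self-healing is the same comparison triggered by a change in the cluster rather than in Git: a manual edit shows up as an update back to the Git version.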

Resource Management Features

Automatic Pruning:

- ArgoCD removes resources not present in Git manifests

- Prevents resource drift and ensures clean deployments

- Configurable via prune: true in the sync policy

Self-Healing:

- ArgoCD continuously monitors resource state

- Automatically reverts manual changes back to Git state

- Ensures configuration consistency across environments

Progressive Sync:

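- Rolling updates are carried out by the Kubernetes Deployment controller, allowing zero-downtime updates when readiness probes are configured

- ArgoCD monitors deployment rollout status and health

- ArgoCD itself does not roll back automatically when health checks fail; it reports the application as Degraded, and automatic rollback requires additional tooling such as Argo Rollouts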

Resource Hooks:

- PreSync: Execute before main sync (database migrations)

- Sync: Normal resource application

- PostSync: Execute after sync completion (tests, notifications)

- SyncFail: Execute if sync fails (alerts, cleanup)
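Hooks are ordinary manifests marked with the argocd.argoproj.io/hook annotation and, optionally, argocd.argoproj.io/hook-delete-policy. The sketch builds such a manifest for a hypothetical PreSync migration Job as a Python dict and prints it as JSON (JSON is also valid YAML, so the output could be saved as a manifest file). The job name, image and command are placeholders, and hook_job is an illustrative helper.

```python
import json
from typing import List

# The hook phases described in this guide
HOOK_PHASES = {"PreSync", "Sync", "PostSync", "SyncFail"}


def hook_job(name: str, phase: str, image: str, command: List[str]) -> dict:
    """Build a Kubernetes Job manifest that ArgoCD runs as a sync hook."""
    if phase not in HOOK_PHASES:
        raise ValueError(f"unknown hook phase: {phase}")
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {
            "name": name,
            "annotations": {
                "argocd.argoproj.io/hook": phase,
                # Delete the Job after it succeeds so a later sync can create it again
                "argocd.argoproj.io/hook-delete-policy": "HookSucceeded",
            },
        },
        "spec": {
            "backoffLimit": 1,
            "template": {
                "spec": {
                    "restartPolicy": "Never",
                    "containers": [{"name": name, "image": image, "command": command}],
                }
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical migration job; image and command are placeholders
    job = hook_job("db-migrate", "PreSync", "example.com/migrator:1.0", ["./migrate", "up"])
    print(json.dumps(job, indent=2))
```

Because a PreSync Job must finish successfully before the main sync proceeds, a failed migration stops the rollout instead of deploying code against an unmigrated database.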

Benefits of Kustomization with ArgoCD

Environment Consistency:

- Same base configuration across all environments

- Environment-specific overlays ensure proper customization

- Eliminates configuration drift between environments

Image Management:

- Immutable image digests ensure exact deployments

- Centralized image updates across multiple resources

- Full traceability from source code to running containers

Configuration Management:

- Environment-specific secrets and config maps

- Patch-based configuration changes

- Validation and testing of configuration changes

Operational Excellence:

- GitOps workflow with full audit trail

- Automatic synchronization and health monitoring

- Self-healing capabilities for infrastructure resilience

Docker Container Handling

Container Build and Push Process

```yaml
# Docker container workflow
jobs:
  build-and-push:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4

      # Authentication step
      - uses: p6m-actions/docker-repository-login@v1
        with:
          registry: ${{ vars.CONTAINER_REGISTRY }}
          username: ${{ vars.DOCKER_USERNAME }}
          password: ${{ secrets.DOCKER_TOKEN }}

      # Multi-platform builds need QEMU emulation and a buildx builder
      - uses: docker/setup-qemu-action@v3
      - uses: docker/setup-buildx-action@v3

      # Build and push after authentication
      - name: Build and push Docker image
        run: |
          docker buildx build \
            --platform linux/amd64,linux/arm64 \
            --tag ${{ vars.CONTAINER_REGISTRY }}/app:${{ github.sha }} \
            --tag ${{ vars.CONTAINER_REGISTRY }}/app:latest \
            --push .
```

Container Security and Scanning

- Image Scanning: Automated vulnerability scanning before deployment

- Registry Security: Private registry with access controls

- Multi-platform Builds: Support for AMD64 and ARM64 architectures

- Image Signing: Container image signing for supply chain security

Repository Release Process

Development Branch Strategy

The repository release process creates structured branches to support ongoing development and maintenance:

Branch Management

- Release Branch Creation
  - Created for each major version release
  - Provides stable base for production deployment
  - Enables parallel development on main branch

- Dev Branch for Hotfixes
  - Automatically created from release branch
  - Supports critical bug fixes and patches
  - Maintains isolation from ongoing development

- Frozen Codebase Snapshot
  - Release branch represents exact production state
  - Immutable reference for debugging and rollbacks
  - Complete audit trail for compliance

- Future Modifications Support
  - Structured approach for post-release changes
  - Clear branching strategy for emergency fixes
  - Maintains code integrity and traceability
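Under semantic versioning, these branches imply different version bumps. Assuming hotfixes on a release branch increment PATCH (v1.2.3 → v1.2.4) and a new major release from main resets MINOR and PATCH (v1.2.3 → v2.0.0), the bump rules can be sketched as follows; the function names are illustrative and pre-release suffixes are not handled.

```python
from typing import Tuple


def parse_version(version: str) -> Tuple[int, int, int]:
    """Parse 'vMAJOR.MINOR.PATCH' into integers."""
    major, minor, patch = version.lstrip("v").split(".")
    return int(major), int(minor), int(patch)


def bump(version: str, part: str) -> str:
    """Return the next version for a 'major', 'minor' or 'patch' release."""
    major, minor, patch = parse_version(version)
    if part == "major":
        return f"v{major + 1}.0.0"
    if part == "minor":
        return f"v{major}.{minor + 1}.0"
    if part == "patch":
        return f"v{major}.{minor}.{patch + 1}"
    raise ValueError(f"unknown part: {part}")


if __name__ == "__main__":
    print(bump("v1.2.3", "patch"))  # hotfix on the release branch
    print(bump("v1.2.3", "major"))  # next major release from main
```

Keeping hotfixes to PATCH bumps means a release branch never produces a version that collides with the next release cut from main.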

GitHub Actions Integration

- Runs as a GitHub Actions workflow for branch and version management; it is separate from the application deployment pipeline

- Automated branch creation during release process

- Integration with repository-release action for version management

- Webhook notifications for downstream systems

Best Practices

Deployment Safety

- Blue-Green Deployments: Zero-downtime deployments with traffic switching

- Canary Releases: Gradual rollout with monitoring and automatic rollback

- Health Checks: Comprehensive application and infrastructure health validation

- Smoke Tests: Post-deployment validation of critical functionality

Release Management

- Semantic Versioning: Consistent version numbering across all components

- Change Management: Structured approval processes for production changes

- Communication: Automated notifications for deployment status updates

- Documentation: Automatic generation of release notes and change logs

Security Considerations

- Secrets Management: Secure handling of deployment credentials and API keys

- Access Controls: Role-based access for deployment approvals and execution

- Audit Logging: Complete audit trail for all deployment activities

- Compliance: Adherence to regulatory requirements and security policies

Related Resources

- Actions Library - Reusable deployment actions and components

- Workflow Examples - Complete deployment workflow templates

- Platform Infrastructure - ArgoCD and Kubernetes infrastructure

- Troubleshooting - Common deployment issues and solutions